Inside look: How Big Tech is using generative AI to win over finance

Execs from Amazon, Google and IBM explain their capital markets strategy when it comes to rolling out new AI tools.

When the world looks back on 2023, a few things may immediately come to mind: wildfires around the world, the Titan submersible tragedy, Donald Trump’s unprecedented string of indictments as a former US president, or the coronation of King Charles. But 2023 may also be remembered as the year artificial intelligence was the topic everyone couldn’t stop talking about.

To be more specific, it’s generative AI that has captured people’s attention. It started with ChatGPT at the end of 2022 and has since snowballed. A recent Gartner report placed generative AI at the Peak of Inflated Expectations on its 2023 Hype Cycle, while projecting that the technology could deliver transformational benefit within two to five years. It has captured the attention of almost every industry, from healthcare to education, and capital markets are no exception.

In January, it wasn’t entirely clear how it could be adopted within the capital markets, but industry participants had told WatersTechnology that as the tech evolves and hurdles get cleared, everything from the analyst’s role to the way critical infrastructure is built could be disrupted along the way. Since then, both data vendors and software providers have released everything from specialized chatbots to their own large language models. Even as bank compliance departments remain wary of this evolving form of machine learning and natural language processing, innovation in this space is ramping up, and it’s clearly not slowing down.

Leading the charge are the major cloud providers. While Microsoft clearly had a head start through its OpenAI partnership, Amazon Web Services (AWS), Google, and IBM have all rolled out their own generative AI offerings in the last few months. Second-quarter earnings calls from all four made clear that they see generative AI as an important component of their strategies going forward.

For Monica Summerville, head of capital markets technology research at consultancy Celent, this move makes sense, and it can be tied back to one simple thing: data.

“As a cloud provider, I think this is absolutely something they’re going to have to do. At the end of the day, they’re trying to increase the amount of compute and services they offer that runs through the cloud,” she says. At the heart of that is increasing the amount of data that is in the cloud.

Over the last few weeks, WatersTechnology spoke to three of the four Big Tech providers (Google, AWS, and IBM) to understand how they are thinking about and approaching generative AI, and how it fits into their existing cloud strategies. (Note: When approached for this story, Microsoft declined an interview and instead sent WatersTechnology a statement on its generative AI strategy. More information on how Microsoft is approaching AI can be found here.)

Google

Zac Maufe knows a thing or two about data. Prior to his role as head of regulated industries at Google Cloud, he spent 15 years at Wells Fargo, where he served in several roles, including chief data officer. He hasn’t strayed far from data since.

Regulated industries at Google span multiple sectors, including healthcare, aerospace, manufacturing, and financial services. Within financial services, Maufe says he spends his time figuring out which Google technologies are best suited to payments, banking, insurance, and capital markets. “Because Google is a data company at its heart, most of that leads back to data challenges and then AI and machine learning as tools to get insights out of that data,” he says.


AI isn’t unfamiliar territory for Google. The company has long been involved in research around the technology. According to reporting from the Washington Post, it had published 500 studies since 2019 but stopped sharing its research at the beginning of this year because of emerging competition. One Google study from 2017 introduced transformers, a key element in the recent developments around AI. Tools like Meena and BERT (which stands for Bidirectional Encoder Representations from Transformers) demonstrated the potential of natural language processing.

That potential has brought us to the current moment with generative AI and large language models, and Google, like others, is eager to provide the right tools.

Through Vertex AI, Google Cloud’s AI experimentation platform, users have access to Model Garden, which includes foundational models developed by Google as well as open-source and third-party models. Google’s models include PaLM for text and chat, Imagen for image generation, and Codey for code completion. Open-source models include BERT, among others.

During Google’s second quarter earnings call last month, chief executive officer Sundar Pichai spoke about the importance of providing options. “[With] the cloud, we’ve really embraced open architecture [and] we have embraced customers wanting to be multi cloud when it makes sense for them,” he said. “So similarly, you would see with AI, we will offer not just our first party models, we will offer third-party models, including open-source models. I think open source has a critical role to play in this ecosystem.”

These tools have been integrated to sit on top of the current Google Cloud stack, Maufe says. “If you have data on Google Cloud, you can start experimenting very quickly.”

AWS

You can’t think about Amazon without thinking about products like Alexa or the Amazon shopping experience. Jim Fanning, director of global financial services for Amazon Web Services, points to those products as evidence of Amazon’s work around machine learning and artificial intelligence.

“We’ve been at it for 20 years,” he says.

With generative AI in what Fanning describes as its “early days,” AWS is looking to provide the foundation for deploying AI within the AWS environment. In April, AWS launched Bedrock, a platform for building and scaling AI applications on foundational models from both Amazon and third-party providers. Those foundational models are accessed through an API, and users can connect their own data to them without having to train the models themselves.

The models currently available include Amazon’s own Titan as well as models from AI startups Anthropic, Stability AI, and AI21 Labs.

“Our approach is saying there is not going to be a single foundational model to rule them all,” Fanning says. “When it comes to foundational models, the more granular you can get in terms of determining the task and the answers you want to drive, the more accurate it’s going to be.” He says he anticipates more foundational models being developed with both specific and broad focuses.

Among generative AI’s proposed use cases is coding. AWS’s offering in this space is CodeWhisperer, a coding companion trained on both open-source code and Amazon’s own codebase that suggests code snippets based on natural-language prompts. Fanning, who started his career as a developer, says the tool could shorten development time while keeping developers in the loop. “They’re continuing to write code, they’re continuing to look at what CodeWhisperer is generating and matching and saying, ‘Is that going to do what I want it to do?’” he says.

Speaking at the AWS Summit in New York last month, Swami Sivasubramanian, VP of databases, analytics, and ML at AWS, described generative AI as being at a tipping point. “The convergence of technological progress and the value of what it can accomplish today is because of the massive proliferation of data, the availability of extremely scalable computing infrastructure and the advancement of ML technologies over time,” he told a packed crowd at Manhattan’s Javits Center.

He also stressed the importance of democratization in AWS’s strategy. “Customers need the ability to securely customize these models with their data and then they need easy-to-use tools to democratize generative AI within their organizations and improve employee productivity,” he said.

IBM

The year is 2011, and IBM, the company best known for the mainframe, has introduced its next signature innovation: Watson. Built to answer questions on the iconic trivia show Jeopardy!, the question-answering computer system was an eye-catching demonstration of what technology might be able to do down the road and how humans might interact with it. True to its original purpose, the system faced off against Ken Jennings, Jeopardy!’s greatest champion, and easily won.

More than a decade later, Watson is still ingrained in how IBM approaches AI. IBM announced Watsonx in May, and the platform became available for use last month.

Watsonx is made up of three major components: watsonx.ai, watsonx.data, and watsonx.governance. In watsonx.ai, users can train, validate, and deploy AI models faster and at scale. Models from Hugging Face are available, as well as foundational models trained by IBM. Watsonx.data provides access to data across a number of environments, including the cloud and on-premises. With this component, data does not leave the environment it sits in; instead, the AI technology goes to where the data sits. In watsonx.governance, AI activities across an organization can be monitored to meet regulatory and ethical requirements. This component will be generally available in November.

“We added an x on Watson because we wanted to signify the evolution of AI that is open, trusted, targeted, and empowering,” says John Duigenan, distinguished engineer and general manager for the global financial services industry at IBM. “Open in the sense that we are building an open community of model builders, all of whom can combine and provide their models to our platform; trusted meaning bias free and explainable; targeted specifically for the enterprise and enterprise use cases.”

Duigenan stresses that consumer-grade AI will not work for the enterprise segment, and that the AI IBM provides to customers must be explainable both to the internal business and to regulators.

IBM chief executive officer Arvind Krishna highlighted Watsonx use cases during the company’s second-quarter earnings call last month. One example was Citi’s effort to use large language models (LLMs) to connect controls to internal processes. Meanwhile, NatWest is embedding Watsonx into its chatbot to improve the customer experience. “We believe the opportunity for large language models for enterprise companies is immense given the existing amount of business data,” he said.

Regulatory matters have been a focal point of IBM’s cloud offering, but Duigenan sees regulatory use cases for AI as well. “We’ve done a huge amount of work in this space with some large clients, especially around using natural language processing to understand regulations,” he says. “To help on the basis of a regulation, a company forms policies, maps policies and procedures to controls—those controls could be human, operational, technical or preventative.” KYC (know-your-customer) and AML (anti-money laundering) regulations, in particular, could present a good use case as those processes can be arduous and complex.

A look ahead

Some industry participants WatersTechnology has spoken to in the past have described the generative AI space among the cloud providers as a crowded room. Asked whether they agreed, all three providers said no, pointing to factors that set them apart from their competitors (without naming those competitors directly).

At the center of all three providers’ offerings is the idea of optionality. Foundational models allow users to build their own AI tools, whether a chatbot or a digital assistant. All three have concluded that offering choice is the best strategy, since a single model that rules them all is unlikely to emerge.

They also all recognize the importance of security. All three highlighted that data used to create AI tools never leaves the environment it sits in, and that’s incredibly important as data leakage becomes a concern in this space. These are cloud providers after all, and they play to their strengths building out their AI tools inside their cloud offerings.

Explainability and factuality are also common themes. By these measures, all three are moving in the right direction and have laid the proper foundation for innovation as this moment ramps up.

And while on the surface these providers appear to offer very similar things, it may be what’s under the hood that makes the difference, says Celent’s Summerville. “You’d have to look at what each provider is giving you in terms of a proprietary foundation model, but they’re also aligning with different open-source and third-party providers,” she says. “The services they layer on top of that are also going to be a way they can differentiate; how well tools are embedded in the developer workflow is another area.”

As WatersTechnology has noted before, the current moment in AI is following a roadmap similar to that of cloud. In the not-too-distant past, financial firms embarking on their cloud journeys largely opted for private clouds. That slowly gave way to hybrid private–public models. And now some firms are looking to sunset their datacenters altogether and lean heavily on the expertise of Big Tech.

As Summerville sees it, it could come down to “horses for courses”.

“With horse racing, you’d have different horses that run the flat versus jump course. If you are going to back a horse, you have to make sure that horse runs on that course really well,” she says. “So the expression ‘horses for courses’ refers to this and it also works here to describe matching your cloud provider with your use case.” In other words, in the same way capital markets firms have looked to different providers for certain tools in the cloud, they could do the same for generative AI and large language models.

With additional reporting from Anthony Malakian

Copyright Infopro Digital Limited. All rights reserved.

More on Emerging Technologies

Quants look to language models to predict market impact

Oxford-Man Institute says LLM-type engine that ‘reads’ order-book messages could help improve execution

The IMD Wrap: Talkin’ ’bout my generation

As a Gen-Xer, Max tells GenAI to get off his lawn—after it's mowed it, watered it and trimmed the shrubs so he can sit back and enjoy it.

This Week: Delta Capita/SSimple, BNY Mellon, DTCC, Broadridge, and more

A summary of the latest financial technology news.

Waters Wavelength Podcast: The issue with corporate actions

Yogita Mehta from SIX joins to discuss the biggest challenges firms face when dealing with corporate actions.

JP Morgan pulls plug on deep learning model for FX algos

The bank has turned to less complex models that are easier to explain to clients.

LSEG-Microsoft products on track for 2024 release

The exchange’s to-do list includes embedding its data, analytics, and workflows in the Microsoft Teams and productivity suite.

Data catalog competition heats up as spending cools

Data catalogs represent a big step toward a shopping experience in the style of Amazon.com or iTunes for market data management and procurement. Here, we take a look at the key players in this space, old and new.

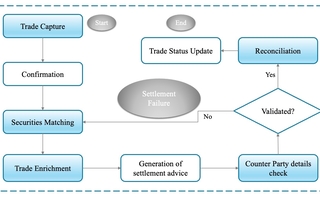

Harnessing generative AI to address security settlement challenges

A new paper from IBM researchers explores settlement challenges and looks at how generative AI can, among other things, identify the underlying cause of an issue and rectify the errors.