Large language models: Another AI wave has come—what could it bring?

Since the release of ChatGPT, excitement and hype have been abundant across industries for this form of generative AI. For capital markets, the wave of innovation that could result may be a few years away but it’s worth paying attention to—and being critical of.

Steve Rubinow is no stranger to evolution in technology. He oversaw the changing landscape of exchange technology at the New York Stock Exchange as its chief innovation and technology officer in the 2000s and 2010s. He saw the dot-com boom up close when he made the jump from a job at Fidelity Investments to get in on the ground floor of Silicon Valley’s meteoric rise. Most importantly, he’s seen the potential in artificial intelligence technology before.

“We’re in that mode, again, where there’s a lot of good stuff going on—you can’t dispute that,” he says.

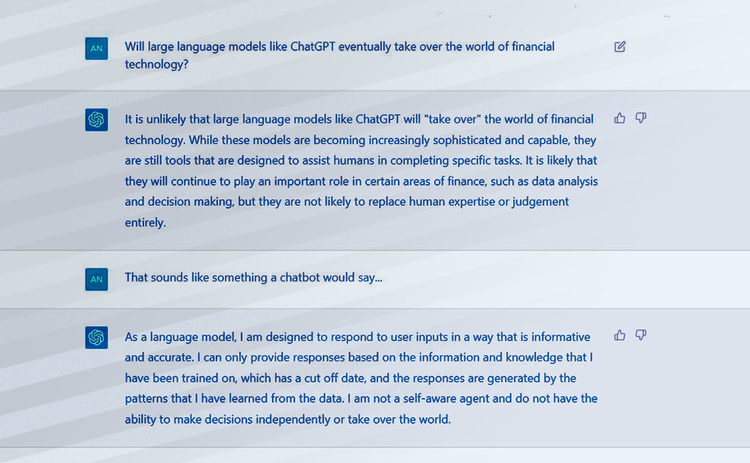

That good stuff includes ChatGPT, or Chat Generative Pre-trained Transformer, the chatbot that everyone seems to be talking about. Released to the public on November 30, 2022, as a research preview by artificial intelligence laboratory OpenAI, the chatbot is heating up the conversation around generative AI and what it can offer users.

For capital markets, the impact that ChatGPT and the technology underpinning it could have is not immediately clear, as the tech is in what many see as its “first generation.” But as the tech evolves and hurdles get cleared, everything from the role of the analyst to the way critical infrastructure is built could be disrupted along the way.

ChatGPT is a sibling model to OpenAI’s InstructGPT, which was released in January 2022. InstructGPT was trained to follow directions from a user better than GPT-3, another OpenAI model, which is more inclined simply to predict the next word in a sequence of text. ChatGPT goes one step further in offering a more conversational interface than its sibling model. Simply put, ask the chatbot a question and it will answer with a human-speech-like response that it generates by connecting patterns learned from its training data.

ChatGPT is built atop the GPT-3.5 large language model (LLM) developed by OpenAI and has been trained using both supervised and reinforcement learning techniques. GPT-3.5’s predecessor, GPT-3 or Generative Pre-trained Transformer 3, was trained on a gargantuan dataset and has 175 billion machine-learning parameters.

LLMs are AI models trained on huge swaths of text with the goal of making connections and recognizing patterns that will allow them to do specific tasks. To train a model, a variety of sentences, either incomplete or full, are fed into a neural network. Words in a sentence are masked out, and it’s the task of the network to figure out what the missing word is based on sentences it has already seen. As an example, the full sentence may have been “this is a yellow car,” with yellow masked out and the network being fed data about New York City cabs. If the network does not guess yellow, the parameters of the neural network will be tweaked so it can get closer to the answer.
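
The masking-and-tweaking loop described above can be sketched in a few lines of Python. This is a toy illustration only, not how OpenAI or Google train their models: the five-sentence corpus, the single weight table standing in for a neural network, and the function names `predict`, `train` and `fill_mask` are all assumptions made for the example.

```python
import math

# Hypothetical toy corpus; real LLMs are trained on vastly more text.
CORPUS = [
    "this is a yellow car",
    "the cab is a yellow car",
    "a new york cab is yellow",
    "this is a red apple",
    "the apple is red",
]

VOCAB = sorted({word for sentence in CORPUS for word in sentence.split()})
INDEX = {word: i for i, word in enumerate(VOCAB)}
V = len(VOCAB)


def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict(weights, context):
    """Probability of each vocabulary word being the masked word."""
    logits = [sum(weights[INDEX[c]][j] for c in context) for j in range(V)]
    return softmax(logits)


def train(epochs=200, lr=0.1):
    # weights[context_word][candidate_word]; a stand-in for network parameters.
    weights = [[0.0] * V for _ in range(V)]
    for _ in range(epochs):
        for sentence in CORPUS:
            words = sentence.split()
            # Mask each word in turn and ask the model to guess it.
            for pos, target in enumerate(words):
                context = words[:pos] + words[pos + 1:]
                probs = predict(weights, context)
                # Tweak the parameters toward the right answer
                # (gradient of the cross-entropy loss).
                for j in range(V):
                    error = probs[j] - (1.0 if j == INDEX[target] else 0.0)
                    for c in context:
                        weights[INDEX[c]][j] -= lr * error
    return weights


def fill_mask(weights, sentence):
    """Return the most probable word for the [MASK] slot."""
    context = [w for w in sentence.split() if w != "[MASK]"]
    probs = predict(weights, context)
    return VOCAB[max(range(V), key=probs.__getitem__)]


weights = train()
print(fill_mask(weights, "this is a [MASK] car"))
```

On this toy corpus the sketch should settle on “yellow” for the masked slot, because the words around the mask co-occur with it in the training sentences. Production models replace the single weight table with a deep transformer network and billions of parameters, but the guess-then-adjust principle is the same.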

GPT-3.5 is just one LLM developed by AI researchers in the last few years. The GPT models are currently not open-sourced, but others from Google, such as Bidirectional Encoder Representations from Transformers, or Bert, have been. When Google published Bert, it supplied the code and downloadable versions of the model already pre-trained on Wikipedia and a dataset called BookCorpus, consisting of roughly three billion words in total.

“The solution [ChatGPT] as it is today, it’s built upon a large amount of public information,” says Tim Baker, founder of consultancy Blue Sky Thinking and financial services practice lead at software provider Expero. “And I think what we’re going to see is the same technology being trained on both industry data—or industry vertical data—and also private data, so that it can become a much deeper expert in certain areas—like, for example, financial services.”

That leaves the door open for a ChatGPT-like solution, trained on industry data and the private data of institutions, that could in theory produce research and valuation reports, much as a modern-day analyst does. Some say the functions of the analyst have already gone through monumental changes.

“If you look at what’s going on in capital markets, you know the role of the analyst, it’s already changing. They used to crunch all the numbers by themselves; they don’t anymore,” says a senior executive at a very large data provider who did not have permission to speak publicly on this subject. “Now you have all this information coming to you that’s at your fingertips, you don’t have to do all the number crunching yourself.”

They say the ability to take ChatGPT or a similar version of it and train it on private data and run it internally, like the running of one’s own private datacenters, could create something that makes recommendations or decisions, going beyond putting a report together. Its efficiency would rely on inputting the correct level of information and asking the right question.

But they also acknowledge that feasibility and financial constraints currently prevent this sort of system from existing.

“LLMs are hard to scale and they’re really, really expensive,” adds a machine learning engineer at a different very large financial data and technology provider. “And you’re basically boiling the ocean to make these models—[there are] trillions of parameters in the model. There are all sorts of custom hardware being built to run them right now. But they do represent a significant leap in technology.”

Coders look out?

One area that is seeing significant buzz around ChatGPT is coding. Do a quick Google search for “ChatGPT coding” and you’re met with an abundance of videos and blog posts with titles like “ChatGPT tutorial: How to easily improve your coding skills with ChatGPT” and “Here’s how to 10X your coding skills with ChatGPT.”

Blue Sky Thinking’s Baker says that the chatbot has shown it’s capable of writing half-decent code and that it’s only a matter of time before developers use it to write code faster and even test it that way. “If you’re in an algorithmic world, it’s a big productivity booster for you and it might also make those investment strategies more accessible, because more and more people will be able to build strategies.”

In the world of hedge funds and asset management, investment models are being built—either rules-based or valuation-based—and building those models takes time. “Some hedge funds have been doing it for years. They’ve got vast numbers of data scientists writing Python and constantly building and evolving platforms,” Baker says. For an industry that is an intensive user of computing and software, capabilities like ChatGPT could allow faster and easier builds over time.

It could also prove a potential acceleration tool for the replacement of legacy code. “There’s huge amounts of legacy code, very old systems that are 20-plus years old, written on programs like Cobol. And the number of Cobol programmers is falling every day as they retire, so there’s a lot of infrastructure running on relatively old code,” Baker says. “These types of tool are very good at doing things like rewriting this code in Java or C# or your current preferred languages, so I think it will be quite good at doing those types of project. That might accelerate the modernization that’s going on and has been going on in the industry for many years.”

But this doesn’t necessarily mean any developer’s job is in danger of being replaced by a computer. “I believe this is going to be a net add, and it’s not going to take things away,” says the senior executive at the data provider. “It will be an additional tool, an additional capability.”

They add: “I think if we fast-forward enough into the future, people won’t be writing things from scratch—you will be writing on higher concepts, and reusability. So there will be packages, there will be tooling, there will be capabilities available, but you’ll need to kind of splice them together to make bigger, better things out of it.”

Humans in the loop

Though the realm of possibilities for what technology like ChatGPT could provide seems to focus on increased productivity, some say it will remain beneficial to keep a human involved alongside it.

“With financial services, everything is so locked down because you’re talking about moving people’s money around. So is it really a good idea to have a system or computer write code for another computer and then test that code? It feels like you’re still going to want a human in the loop,” Baker says.

Rubinow, now a lecturer at the Jarvis College of Computing and Digital Media at DePaul University, says that while the chatbot is impressive, some people may ascribe to it a certain level of credibility that maybe it doesn’t yet deserve. “We need people to look at everything with a critical eye. And now, of course, it helps if they understand the material they’re looking at. Because if they ask ChatGPT about things they’re not expert in, they’d have a hard time discerning mistakes, or errors, or ambiguous statements,” he says.

The chatbot might be best served by working with an expert user, Rubinow says: someone who knows the subject matter and can apply critical thinking based on their own experience. “They can separate the stuff that really is useful and makes sense [from] the stuff that’s maybe questionable or dubious, or, in some cases, plain wrong,” he says.

“When I worked in the exchange world, people used to say to me, why does anyone ever need a trading floor? Computers talking to computers, high-frequency trading, all that stuff—why do you need a trading floor? As the computers talk to each other across the network, why have humans in the middle of the loop? What I said is that … when faced with a brand-new set of scenarios that the software has never seen before, it might either try and push the data it’s just received into the model that it was trained on—and maybe get good results and maybe erroneous results—or it will say, depending on the program, the parameters you’ve given me don’t match anything that I historically have been trained on, so I can’t give you an output. Humans are much better today at dealing with uncertainty, edge conditions, and novel conditions.”

Vall Herard agrees. Herard is the chief executive officer and co-founder of Saifr, a company incubated at Fidelity Labs that is looking to apply AI to regulatory and compliance use cases. “In industries where there is not only standardization but also interpretation of the rules based on risk appetite, that is a human endeavor that will always involve humans,” he says. “And because artificial intelligence is not capable of that self-reflection, it’s not going to be able to replace that expert judgement.”

Expert judgement will be crucial as more content is generated, potentially for analysts, says Shameek Kundu, former chief data officer at Standard Chartered and now head of financial services and chief strategy officer at AI firm Truera.

“One of the problems is there will be a lot more noise out there in terms of content, because ChatGPT is speaking from publicly available data sources, and there will be a lot more machine-generated or barely human-intermediated content,” he says. “Part of what a good analyst will have to do is to figure out the wheat from the chaff. How much of this can I trust? Do I need to double check?”

The current public ChatGPT interface offers example prompts as well as capabilities of the chatbot. They include the ability to remember what the user previously said in a conversation, the ability for the user to provide follow-up corrections, and training to decline inappropriate requests. There are also limitations: occasionally inaccurate information, biased content or harmful instructions, and the disclaimer that the bot has limited knowledge of world events after 2021. Regrettably, that means ChatGPT might not be aware that a head of lettuce lasted longer than Liz Truss’s term as prime minister of the UK.

“People are expecting the moon, the sun and the stars based on what they read in the media, and then they let their imagination get carried away with it,” Rubinow says. “They listen to prospective investors who want to make it sound even more exciting. You have to figure out for yourself what the advantages are and what the limitations are.”

“If all you’re doing is just solving an existing problem slightly better with new technology, it’s difficult to make the mark, so there was a bit of shakiness about whether AI had kind of plateaued again,” Kundu says. But he’s also optimistic. “What really excites me is that ChatGPT caused a lot more excitement about this space. Hopefully, it will lead to more interesting use cases.”

With additional reporting from Wei-Shen Wong

Copyright Infopro Digital Limited. All rights reserved.

You may share this content using our article tools. Printing this content is for the sole use of the Authorised User (named subscriber), as outlined in our terms and conditions - https://www.infopro-insight.com/terms-conditions/insight-subscriptions/

If you would like to purchase additional rights please email info@waterstechnology.com

Copyright Infopro Digital Limited. All rights reserved.

You may share this content using our article tools. Copying this content is for the sole use of the Authorised User (named subscriber), as outlined in our terms and conditions - https://www.infopro-insight.com/terms-conditions/insight-subscriptions/

If you would like to purchase additional rights please email info@waterstechnology.com

More on Emerging Technologies

Quants look to language models to predict market impact

Oxford-Man Institute says LLM-type engine that ‘reads’ order-book messages could help improve execution

The IMD Wrap: Talkin’ ’bout my generation

As a Gen-Xer, Max tells GenAI to get off his lawn—after it's mowed it, watered it and trimmed the shrubs so he can sit back and enjoy it.

This Week: Delta Capita/SSimple, BNY Mellon, DTCC, Broadridge, and more

A summary of the latest financial technology news.

Waters Wavelength Podcast: The issue with corporate actions

Yogita Mehta from SIX joins to discuss the biggest challenges firms face when dealing with corporate actions.

JP Morgan pulls plug on deep learning model for FX algos

The bank has turned to less complex models that are easier to explain to clients.

LSEG-Microsoft products on track for 2024 release

The exchange’s to-do list includes embedding its data, analytics, and workflows in the Microsoft Teams and productivity suite.

Data catalog competition heats up as spending cools

Data catalogs represent a big step toward a shopping experience in the style of Amazon.com or iTunes for market data management and procurement. Here, we take a look at the key players in this space, old and new.

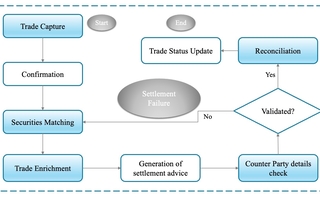

Harnessing generative AI to address security settlement challenges

A new paper from IBM researchers explores settlement challenges and looks at how generative AI can, among other things, identify the underlying cause of an issue and rectify the errors.