What does it take to put a database in the cloud?

There are several ways to migrate a legacy system to the cloud, but each involves a trade-off.

In 2016, Moody’s Analytics surveyed its client base, a sampling of “very conservative” financial services companies, about whether they would like Moody’s solutions to move to the cloud. Three-quarters of respondents said no.

Anna Krayn, head of global industry practice at Moody’s Analytics, recalled the story during the opening remarks of Amazon Web Services’ 2023 Cloud Symposium, held last week in New York.

The same survey administered today would surely yield different results, judging by AWS’s showcase of financial technology and data leaders who not only count the cloud giant as a technology and business partner but say so, on the record, at a conference full of their peers. From Moody’s to Nasdaq, HSBC, and KKR, financial firms are firmly in the cloud or on their way there. And while it’s easy to find mentions of the cloud’s merits (resiliency, elastic scalability, and cost savings, to name a few), it’s harder to see clearly the long, work-intensive road to the promised land the cloud offers, unless you’re walking it yourself.


One of Moody’s Analytics’ cloud projects currently underway is the migration of Orbis, a massive entity database covering more than 450 million companies, to AWS. Planning began in early 2022 and migration work started in the middle of last year, said Dominique Gribot-Carroz, global head of customer experience, who gave a talk at the symposium.

As of March this year, 55% of clients had been migrated to the cloud, with the rest expected to be running there by year end. To put that in perspective, the migration will cover 9,000 accounts, tens of thousands of users, and hundreds of thousands of downstream processes. Gribot-Carroz said the entire journey demands a delicate balancing act: between rehosting and refactoring, and between the respective risk appetites of Moody’s and its clients. More services are slated to be made cloud-native once Orbis is complete.

Pick a plan

To move an old, massive database to the cloud, there are a few possible approaches, and one of them is the worst choice, says Conor Twomey, head of customer success at KX, whose time-series database and analytics engine serves capital markets businesses and other verticals.

The first, “deliberately not good” approach is to tear everything down and rebuild it from scratch as cloud-native, including decades of business logic. But that is time-consuming and risky, and it involves running two systems in parallel.

A route that’s often easier for companies to stomach is lift-and-shift, in which services and products are picked up and dropped into the cloud as they are. But this method gives up the cloud-native features that make running in the cloud competitive: microservices, APIs, containers, and mesh architectures, to name a few.

“Rather than it being a server with your own logo on it, it’s just a server with an AWS logo on it,” Twomey says.

Twomey and KX, which has a partnership with AWS, have instead adopted a more incremental crawl–walk–run approach.

“There’s a tier-one bank in New York that has end-of-day prices back to 1896. You can picture the person taking that old ticker tape and manually putting it into a database system,” he says.

Data that old is typical for KX’s capital markets clients. On top of it sits high-definition data on every order and trade intent from the last seven to 10 years. Taken together, banks are often storing five or more petabytes of market data alone in-house, not counting their security master data, corporate actions, or order execution data.

Know your data usage

Twomey works to help customers understand that all that data, most of which is at rest, doesn’t need to be stored in-house, nor does all of it need to be stored in the cloud. The right balance comes from understanding how the data is actually used.

“If I have sufficient telemetry to understand how my data assets are actually being used, in most cases you find that 95% of all queries against that entire data store is just for the last three months of data,” he says. “What cloud enables you to do is have access to tiered storage—dynamically allocated tiered storage.”
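Twomey’s point about telemetry can be illustrated with a small calculation. The Python sketch below assumes a hypothetical query log in which each record notes the day a query ran and the oldest trade date it read. It measures what share of queries stayed within a recent window (90 days standing in for “three months”) and labels data partitions hot or cold on the same cutoff. The record layout, the 90-day threshold, and the two-tier split are illustrative assumptions, not KX’s or AWS’s actual tooling.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class QueryRecord:
    """One entry from a hypothetical query-telemetry log."""
    run_on: date       # day the query was executed
    oldest_data: date  # earliest trade date the query read


def recent_query_share(log: list[QueryRecord], window_days: int = 90) -> float:
    """Fraction of queries that read only data within `window_days` of when they ran."""
    if not log:
        return 0.0
    recent = sum(
        1 for q in log if (q.run_on - q.oldest_data) <= timedelta(days=window_days)
    )
    return recent / len(log)


def assign_tier(partition_date: date, today: date, hot_days: int = 90) -> str:
    """Keep recent partitions on fast storage; route older ones to a cheaper tier."""
    age_days = (today - partition_date).days
    return "hot" if age_days <= hot_days else "cold"


if __name__ == "__main__":
    today = date(2023, 6, 1)
    # Illustrative log: three of the four queries read only recent data.
    log = [
        QueryRecord(today, today - timedelta(days=5)),
        QueryRecord(today, today - timedelta(days=60)),
        QueryRecord(today, today - timedelta(days=400)),
        QueryRecord(today, today - timedelta(days=10)),
    ]
    print(recent_query_share(log))                # 0.75
    print(assign_tier(date(1896, 5, 26), today))  # cold
```

If a real log showed a figure like the 95% Twomey cites, the same cutoff would mark nearly everything older than the window as a candidate for cheaper storage.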

Rather than keeping it on a local disk or a network-attached storage device, a bank can take its years of old data at rest, heavily compress it, and put it into a service such as Amazon S3, while leaving its on-premises systems alone.
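The compress-and-offload step can be sketched in the same spirit. This minimal example assumes each partition of records is serialized as newline-delimited JSON and gzip-compressed with Python’s standard library before being written to an object store. The symbol, record fields, and key layout are hypothetical, and the upload itself (for example with boto3’s `put_object`) is left as a comment so the sketch runs without cloud credentials. Real systems may use columnar formats and other codecs; this only illustrates the idea.

```python
import gzip
import json
from datetime import date


def compress_partition(rows: list[dict]) -> bytes:
    """Serialize one partition as newline-delimited JSON and gzip it at the highest level."""
    payload = "\n".join(json.dumps(r, sort_keys=True) for r in rows).encode("utf-8")
    return gzip.compress(payload, compresslevel=9)


def decompress_partition(blob: bytes) -> list[dict]:
    """Reverse compress_partition so archived data can still be read when needed."""
    text = gzip.decompress(blob).decode("utf-8")
    return [json.loads(line) for line in text.splitlines() if line]


def cold_object_key(symbol: str, trade_date: date) -> str:
    """Hypothetical object-store key layout: one object per symbol per trading day."""
    return f"archive/{symbol}/{trade_date:%Y/%m/%d}.jsonl.gz"


if __name__ == "__main__":
    # Hypothetical records for an illustrative symbol "XYZ" on one trading day.
    rows = [{"symbol": "XYZ", "price": 40.94, "seq": i} for i in range(500)]
    blob = compress_partition(rows)
    key = cold_object_key("XYZ", date(1896, 5, 26))
    raw = "\n".join(json.dumps(r, sort_keys=True) for r in rows).encode("utf-8")
    print(len(raw), len(blob))  # repetitive records compress well
    print(key)                  # archive/XYZ/1896/05/26.jsonl.gz
    # Hypothetical upload step, not executed here; with boto3 it might look like:
    #   boto3.client("s3").put_object(Bucket="example-cold-bucket", Key=key, Body=blob)
    assert decompress_partition(blob) == rows
```

Keying objects by symbol and date keeps each archived partition independently retrievable, so a rare query against decades-old prices only needs to fetch and decompress the objects it touches.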

Last year, WatersTechnology reported that the London Stock Exchange’s Refinitiv would make its Real-Time Full Tick data available on the public cloud this year. Before that, it reported that Morningstar would move its own tick data platform, then comprising about 2.5 petabytes of tick-level market data, to the cloud.

As demand for cloud-based data storage and delivery systems has grown, banks, asset managers, exchanges, and their vendors have moved to meet it and even get ahead of it. Major exchanges have inked high-profile, long-term deals: Nasdaq with AWS, CME Group with Google Cloud, Cboe with Snowflake, and LSEG with Microsoft Azure.

The rapid march toward the cloud suggests that end users see promise in the technology, but others in the market still hold reservations, particularly around vendor lock-in, potential price hikes, and concentration risk. Firms can expect to wind down some legacy systems or reduce their dependence on them, but the management fees for running cloud-based applications, and the pipes that connect to them, can add up. Those fees come on top of direct expenses for compute power, the network, and storage, all while firms continue to run on-premises applications elsewhere.

In perhaps the lone example of a big-name industry player bucking the cloud trend, a senior executive at the Intercontinental Exchange told attendees at FIA Boca in March that the exchange had no plans to outsource any critical infrastructure to a third-party cloud provider, adding that the CEO and CTO were confident the exchange was better placed than Big Tech companies to manage data-storage projects.

For now, though, the genie is out of the bottle, and if the roster of AWS conference attendees is any indication, many of those at the forefront of the industry are going full speed ahead, whether they harbor concerns or not.


Copyright Infopro Digital Limited. All rights reserved.


More on Emerging Technologies

Quants look to language models to predict market impact

Oxford-Man Institute says LLM-type engine that ‘reads’ order-book messages could help improve execution

The IMD Wrap: Talkin’ ’bout my generation

As a Gen-Xer, Max tells GenAI to get off his lawn—after it's mowed it, watered it and trimmed the shrubs so he can sit back and enjoy it.

This Week: Delta Capita/SSimple, BNY Mellon, DTCC, Broadridge, and more

A summary of the latest financial technology news.

Waters Wavelength Podcast: The issue with corporate actions

Yogita Mehta from SIX joins to discuss the biggest challenges firms face when dealing with corporate actions.

JP Morgan pulls plug on deep learning model for FX algos

The bank has turned to less complex models that are easier to explain to clients.

LSEG-Microsoft products on track for 2024 release

The exchange’s to-do list includes embedding its data, analytics, and workflows in the Microsoft Teams and productivity suite.

Data catalog competition heats up as spending cools

Data catalogs represent a big step toward a shopping experience in the style of Amazon.com or iTunes for market data management and procurement. Here, we take a look at the key players in this space, old and new.

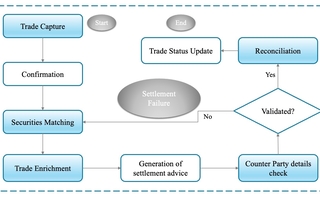

Harnessing generative AI to address security settlement challenges

A new paper from IBM researchers explores settlement challenges and looks at how generative AI can, among other things, identify the underlying cause of an issue and rectify the errors.