New chatbots reveal limitations of legacy API development

As large language models that underpin the likes of ChatGPT and Bard come to market, vendors and trading firms are starting to see the benefits—and challenges—that open APIs provide.

As 2022 came to a close, OpenAI’s ChatGPT burst onto the scene and captured everyone’s attention. Nearly four months later, that audience is still enrapt—even if bank compliance departments aren’t as enamored.

Released to the public on November 30, 2022, as a research preview by artificial intelligence laboratory OpenAI, the chatbot heated up the conversation around generative AI, what it can offer users, and the use of the large language models (LLMs) that underpin ChatGPT. For capital markets, the impact that ChatGPT and the technology underpinning it could have was not immediately clear as the technology is in what some see as its first generation. In January, industry participants told WatersTechnology that as the tech evolves and hurdles get cleared, it could disrupt everything from the role of the analyst to the way critical infrastructure is built.

Since ChatGPT’s release, more chatbots have appeared and so have some capital markets-specific roadblocks. In February, Google revealed its LLM chatbot, Bard, and in March made early access available. This comes on the heels of Microsoft’s extended investment and collaboration with OpenAI to further integrate AI into its tech stack. But in late February, Bloomberg reported that compliance departments at JP Morgan, Goldman Sachs, and Citi were restricting access to ChatGPT due to potential issues around vetting of third-party software and data security.

While the compliance questions will continue to mount, one thing that has come into greater focus is the need for greater consideration when developing and using application programming interfaces.

Simply put, APIs act as intermediaries to allow applications to talk to each other and can be data delivery mechanisms that allow firms to easily transfer data from one place to another. They aren’t always created equally, especially in finance, and ChatGPT is exposing some of those gaps.

Buy-side technology provider Finbourne was founded using what CEO and co-founder Thomas McHugh calls an API-first approach, meaning developers think of APIs almost as individual products that make up a platform, rather than something that is trapped in a larger system. The tech provider has two platforms: LUSID and Luminesce. Lusid, through open (or, publicly available) APIs, takes in data across operational stacks and provides real-time positions, while Luminesce is a data virtualization platform providing a data fabric for analytics and insights.

People should not fall into a false hope or pipe dream that you can take whatever ChatGPT does and put it into production. But the upside of this is that it’s much easier to test code than it is to test the validity of a position paper

Gabriele Columbro, Finos

Finbourne has hundreds of public APIs built in compliance with OpenAPI specifications and published on Swagger, a toolkit for API developers. The OpenAPI Specification is a standard interface to Rest APIs that allows humans and computers to discover and understand service capabilities without access to source code, documentation, or through network traffic inspection. The standard began as an open-source initiative in 2015 under the Linux Foundation, led by giants such as Google, IBM, Microsoft, and others.

“The data that ChatGPT was trained with includes our API specs via the Common Crawl search engine,” McHugh says. GPT-3, OpenAI’s first notable large language model that turned heads in 2020, is trained on a number of data sources, including a filtered version of Common Crawl, a dataset available via a non-profit of the same name. Common Crawl “crawls” the internet every month to provide data and information previously only available to search engine companies. GPT-3 was followed by GPT-3.5 and now GPT-4, which was released last week.

Public vs. proprietary

Here is where it gets interesting: Type “create a cash ladder using LUSID code” into ChatGPT and the chatbot will follow the command and do exactly that.

“A lot of firms claim to do the same, but the ChatGPT query is a perfect example of separating the wheat from the chaff,” McHugh says. “If you try to run the same query with other investment management software providers in the space, it will simply tell you that it can’t, because the software is proprietary.”

He says Finbourne’s open approach since its inception has allowed it to make investment technology accessible and able to support productivity and flexibility in client operations as well as counter single vendor dependency.

“We want to keep our focus on what we do best and enable them to have the optionality over how and where they augment their data, in the wider ecosystem. This is why public documentation and open-source tools like GitHub and Swagger are an important part of our methodology,” McHugh says. “What tools like these and ChatGPT enable is innovation at a much faster rate, and that is a game changer.”

He says firms can test, fail and test again to get to the end state promptly. “This right-to-fail approach is the best kind of innovation, because you have proved it can actually stand up in the real world,” he says.

There are some caveats, as ChatGPT still has some limitations. “It can make up end points that we simply don’t have and infer things inaccurately,” McHugh says. But he says it’s a great start for propelling productivity.

Gabriele Columbro, executive director of the Fintech Open Source Foundation (Finos) says it’s important to recognize that ChatGPT is not 100% correct while utilizing it—again, these chatbots are new and are still being improved upon and tested out. “People should not fall into a false hope or pipe dream that you can take whatever ChatGPT does and put it into production,” he says. “But the upside of this is that it’s much easier to test code than it is to test the validity of a position paper.”

Migael Strydom, CTO at Theia Insights, an AI startup focused on fact-based LLMs for financial services, sees an advantage for open APIs, but also recognizes gaps.

“APIs with public documentation will definitely benefit compared to APIs with closed or less accessible documentation. However, the fact that it’s part of the Common Crawl won’t necessarily be enough,” he says. “The data cutoff date for GPT-4 is apparently late 2021, so APIs that have been modified since then won’t be part of the training data. In that case, the user will have to paste the API documentation into the model’s prompt.”

Prompting a model is not always very straightforward, he adds. The model’s context window is a limitation, which means longer documentation won’t necessarily fit. Additionally, how well the model understands the documentation can depend on subtle changes to the wording or the examples used. He says “prompt engineer” is now a position some companies have started hiring for.

“It’s a skill sort of like googling was in the early days—some people could use Google really well because they knew what sort of keywords to enter into the search bar,” Strydom says. “I wouldn’t be surprised if in the near term, some API providers also publish ‘API prompts’—a prompt that they have crafted that is known to work well with certain code-generating chatbots.”

The race for discovery

Chatbots assisting developers isn’t new. GitHub, one of the largest open-source code repositories, unveiled its Copilot AI assistant in June 2021. GitHub is owned by Microsoft and Copilot’s AI was developed in partnership with OpenAI using OpenAI Codex, a modified version of GPT-3. “It gives you code completion; it writes the function for you; and it’s basically trained on the corpus of existing open-source repositories that they have under GitHub,” Columbro says. “It helps you write code and that’s only possible so far as the code is open, the API is open. Anything proprietary and it wouldn’t be able to do that.”

But that development has also come with risks.

Last year, a few weeks before ChatGPT’s rollout, a class-action lawsuit was filed in the Northern District of California alleging that GitHub, its owner Microsoft, and OpenAI had violated copyright law by training its AI on open-source code and then not giving credit when the AI assistant reproduced the code later. All three defendants filed a motion in late January to have the lawsuit thrown out with a hearing on the matter scheduled for May. Observers say the suit is one to watch as the world continues to be captivated by generative AI.

New questions (and potentially court cases) will continue to be raised around LLMs. What’s clear, though, is that ChatGPT was a breakthrough decades in the making and the floodgates are opening, and with that will come further experimentation and, yes, confusion.

The reason trading firms are so keen to use these new types of chatbots is, yes, to potentially make an analyst or portfolio manager more efficient. But what they also provide is the promise of an augmenting assistant in every company’s growing need to provide context and analysis more easily around a rapidly rising sea of data.

At the same time, as APIs become increasingly popular on Wall Street as a data delivery mechanism, vendors and end-users, alike, will have to consider the sustainability of their API development strategies as new innovations come to the forefront—in this case, the ability to tap into the promise of LLM chatbots.

While capital markets firms and systems were historically closed-off gardens, ChatGPT, Bard, and their future brethren are just further examples that the future of finance is wide open—and in so many different ways.

Further reading

Only users who have a paid subscription or are part of a corporate subscription are able to print or copy content.

To access these options, along with all other subscription benefits, please contact info@waterstechnology.com or view our subscription options here: http://subscriptions.waterstechnology.com/subscribe

You are currently unable to print this content. Please contact info@waterstechnology.com to find out more.

You are currently unable to copy this content. Please contact info@waterstechnology.com to find out more.

Copyright Infopro Digital Limited. All rights reserved.

You may share this content using our article tools. Printing this content is for the sole use of the Authorised User (named subscriber), as outlined in our terms and conditions - https://www.infopro-insight.com/terms-conditions/insight-subscriptions/

If you would like to purchase additional rights please email info@waterstechnology.com

Copyright Infopro Digital Limited. All rights reserved.

You may share this content using our article tools. Copying this content is for the sole use of the Authorised User (named subscriber), as outlined in our terms and conditions - https://www.infopro-insight.com/terms-conditions/insight-subscriptions/

If you would like to purchase additional rights please email info@waterstechnology.com

More on Emerging Technologies

Quants look to language models to predict market impact

Oxford-Man Institute says LLM-type engine that ‘reads’ order-book messages could help improve execution

The IMD Wrap: Talkin’ ’bout my generation

As a Gen-Xer, Max tells GenAI to get off his lawn—after it's mowed it, watered it and trimmed the shrubs so he can sit back and enjoy it.

This Week: Delta Capita/SSimple, BNY Mellon, DTCC, Broadridge, and more

A summary of the latest financial technology news.

Waters Wavelength Podcast: The issue with corporate actions

Yogita Mehta from SIX joins to discuss the biggest challenges firms face when dealing with corporate actions.

JP Morgan pulls plug on deep learning model for FX algos

The bank has turned to less complex models that are easier to explain to clients.

LSEG-Microsoft products on track for 2024 release

The exchange’s to-do list includes embedding its data, analytics, and workflows in the Microsoft Teams and productivity suite.

Data catalog competition heats up as spending cools

Data catalogs represent a big step toward a shopping experience in the style of Amazon.com or iTunes for market data management and procurement. Here, we take a look at the key players in this space, old and new.

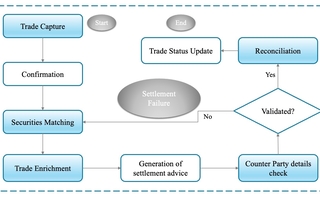

Harnessing generative AI to address security settlement challenges

A new paper from IBM researchers explores settlement challenges and looks at how generative AI can, among other things, identify the underlying cause of an issue and rectify the errors.